<Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference>

Author: Benjamin Warner et al.

Institution: Answer.AI, LightOn, Johns Hopkins University, NVIDIA, HuggingFace

Summary

- Actually, as other BERT-based models, ModernBERT model is merely a newly configurated BERT model with some modifications in model architecture.

- Key points are: RoPE, unpadding, OLMo tokenizer, sequence packing, context length extension, etc.

Introduction

- Even after the advent of many LLM models, encoder-only models like BERT are still widely being used in non-generative downstream applications.

- Especially in Information Retrieval (IR). Encoder-based semantic search is a core of RAG (Retrieval-Augmented Generatrion) pipelines.

- However, many pipelines still rely on older models like original BERT without any adjustment, despite of recent development.

So, there are many drawbacks: 512 tokens limit, bad model design and vocab size, bad performance and computational efficiency.

- Of course, many models were developed to overcome this, like these:

This research presented ModernBERT with the following contributions:

- Improved architecture for increasing downstream performance and efficiency, especially over longer sequence lengths.

- Training on modern and larger scale of data (2 trillion tokens)

- Two models: ModernBERT-base and ModernBERT-large with SOTA performance.

- Result: processing 8192 tokens, two times faster than previous models.

Methods

Architectural Improvements

Modern Transformer

- Disabled bias terms in all linear layers except for the final decoder linear layer. And disabled all bias terms in Layer Norms too.

- Used rotary positional embeddings (RoPE).

- Pre-normalization: Added a LayerNorm after the embedding layer and removed the first LayerNorm in the first attention layer.

- GeGLU activation funcdtion.

Efficiency Improvements

- Attention layers alternate between global attention and local attention.

(Every 3rd layer is global attention, others are local attentions.)- Global Attention: Every token within a sequence attends to every other token.

- Local Attention: Tokens only attend to each other within a small sliding window.

- Unpadding: Removed padding tokens to avoid wasting computation, concatenated all sequences from a minibatch into a single sequence.

Used Flash Attention’s variable length attention and RoPE implementations, allowing jagged attention masks. ModernBERT unpads inputs before the token embedding layer and optionally repads model outputs, leading to performance improvements. - Flash Attention: Provides memory and compute efficient attention kernels.

Model Design

- “Deep & Narrow” models are empirically better than “Shallow & Wide” models, so ModernBERT was designed to be as deep and narrow as possible.

- ModernBERT-base: 22 layers, 149M parameters, 768 hidden size with a GLU expansion of 2,304.

- ModernBERT-large: 28 layers, 395M parameters. 1,024 hidden size, GLU expansion of 5,248.

Training

Tokenizer

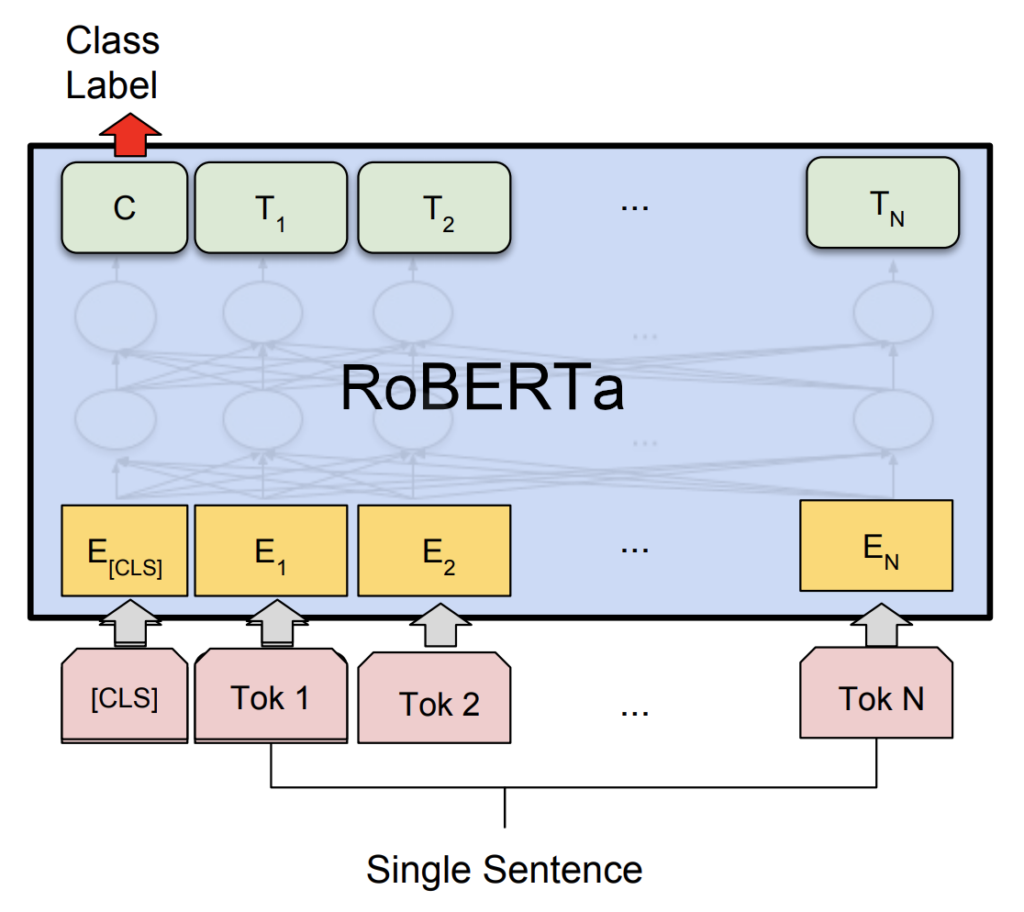

- Used a modified version of the OLMo tokenizer, rather than the original BERT tokenizer.

- Used the same special tokens ([CLS] and [SEP]) as the original BERT model.

- Vocab size: 50,368

Sequence Packing

- Because of unpadding, minibatch-size can vary. To avoid high variance, sequence packing with a greedy algorithm was used. Namely, the algorithm tries to find optimal combination of texts to ensure uniform batch size.

MLM

- Followed MLM (Masked Language Modeling) setup used by MosaicBERT.

- Removed NSP (Next-Sentence Prediction) objective, and used a masking rate of 30%.

Batch Size Schedule

- Starts with smaller accumulated batches, increasing over time to the full batch size (to accelarate training progress)

Context Length Extension

- Thanks to RoPE, which is independent of sequence length, it’s possible to vary sequence lengths.

- ModernBERT extended the context length from 1024 tokens to 8192 tokens (after training on 1.7T tokens, and trained on additional 300B tokens).

- This enables the model to capture long-range dependencies with training efficiency.

With these settings, ModernBERT showed the state-of-the-art performance with the highest training efficiency.

This is so cool! Thanks for the information 🙂