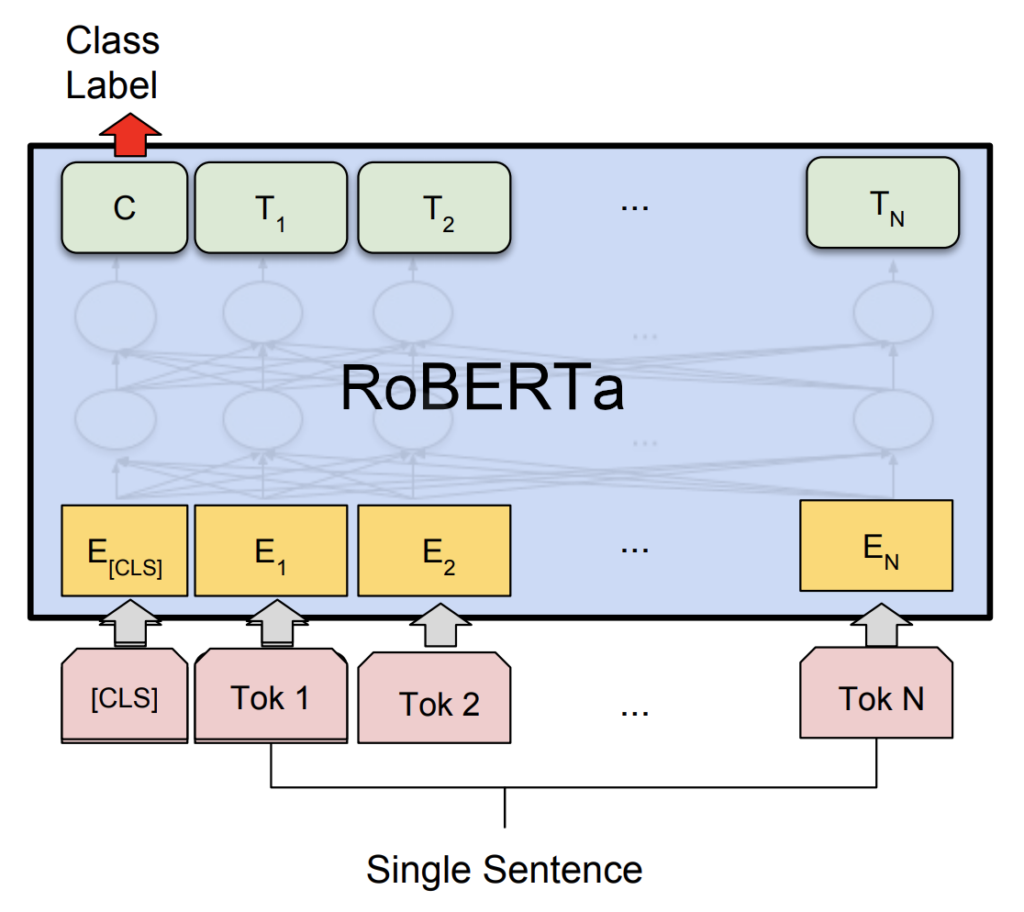

[Paper Review] RoBERTa: A Robustly Optimized BERT Pretraining Approach

Introduction · Experimental Setup · Training Procedure Analysis · Which choices are important for pretraining BERT models? · Static vs. Dynamic Masking...

[Paper Review] ModernBERT

*Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference*...