What is LSTM?

Before Long Short-Term Memory networks (LSTMs), traditional Recurrent Neural Networks (RNNs) struggled to learn long-range dependencies in data, because gradients tend to vanish or explode as they are propagated back through many time steps.
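To make the vanishing and exploding behavior concrete, here is a deliberately simplified sketch. It assumes the gradient is scaled by the same scalar `factor` at every time step. In a real RNN that factor is a product of Jacobian matrices, so treat this as an illustration of the trend only. The function name `backprop_scale` is hypothetical.

```python
def backprop_scale(factor, steps):
    """Scale of a gradient after being multiplied by `factor` once per time step.

    Assumption: a single constant scalar stands in for the per-step Jacobian.
    """
    return factor ** steps


# A per-step factor slightly below 1 shrinks the gradient towards zero.
vanishing = backprop_scale(0.9, 100)

# A per-step factor slightly above 1 blows the gradient up.
exploding = backprop_scale(1.1, 100)

print(f"factor 0.9 over 100 steps: {vanishing:.3e}")
print(f"factor 1.1 over 100 steps: {exploding:.3e}")
```

Even a small deviation from 1 compounds quickly over 100 steps, which is why plain RNNs have trouble linking distant time steps.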

LSTMs were developed to address these limitations by improving gradient flow within the network. Let’s briefly review the structure of LSTMs.

Structure of LSTMs

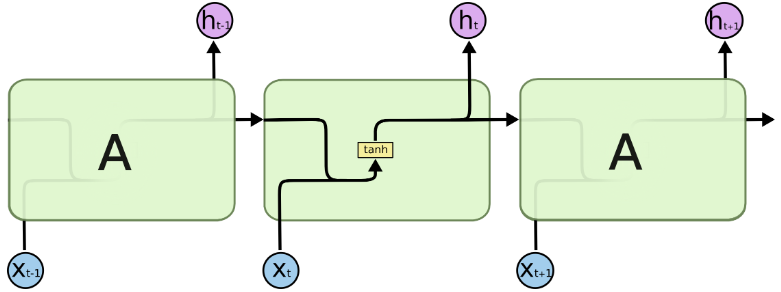

As shown in the figure above, RNNs consist of a chain of repeating neural network modules. LSTMs keep this chain structure, but each repeating module contains four interacting layers that together manage the flow of information.

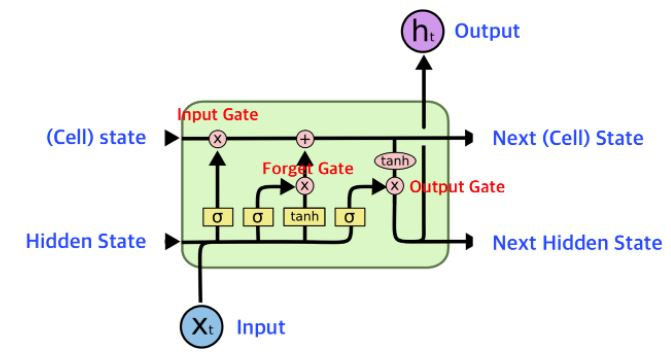

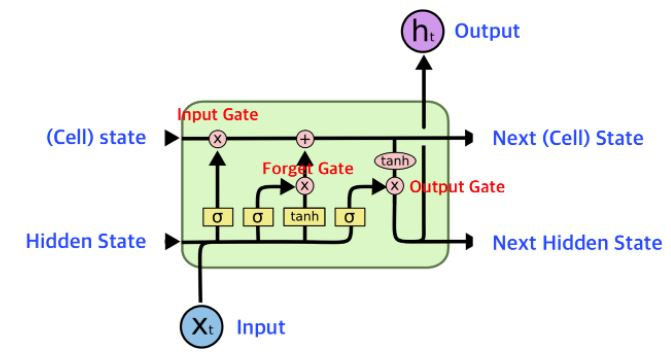

Now, let’s break down the structure of the cell.

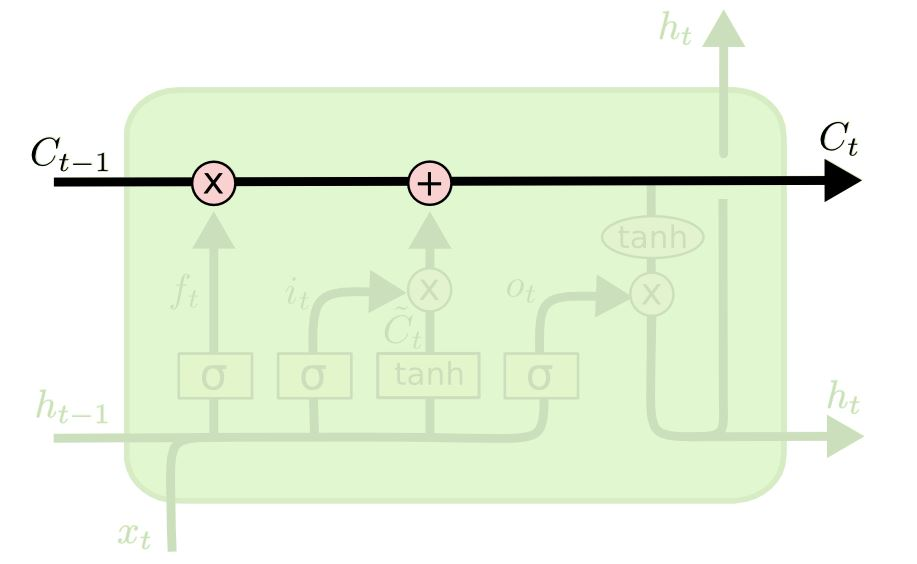

Cell State

The cell state is the heart of LSTMs. It functions like a conveyor belt, carrying information through the network with only minor changes at each step, which helps gradients survive over long sequences.

Information is added or removed through structures called “gates,” which learn which information to keep or discard. Here are the four key components of an LSTM cell.

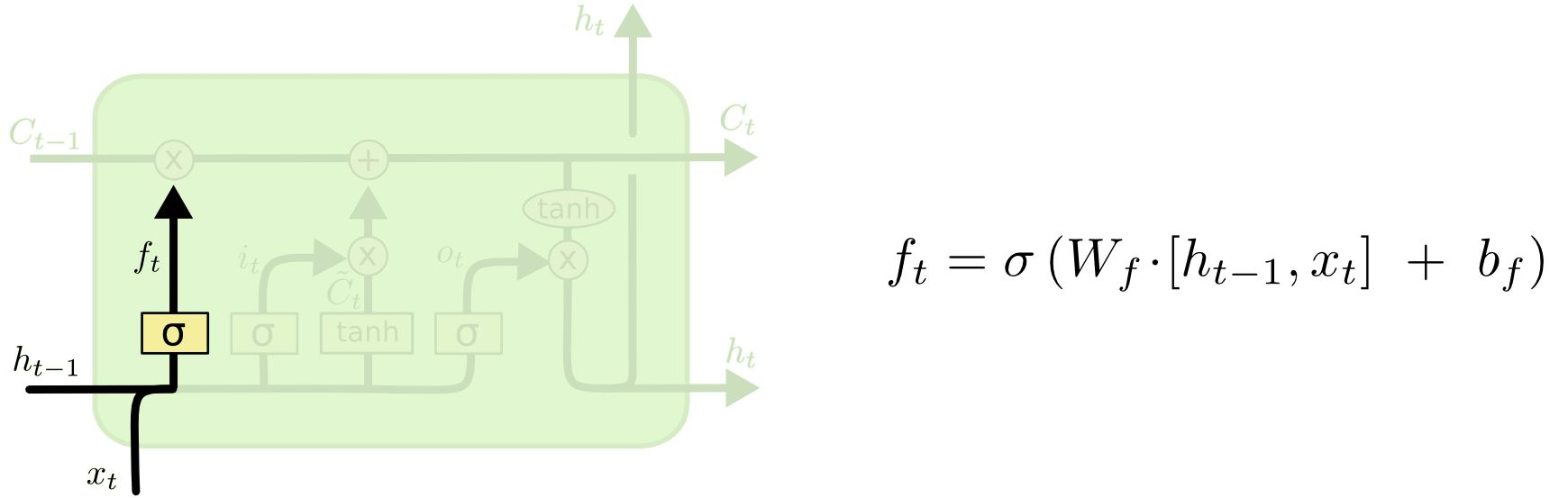

Forget Gate

The forget gate decides which information to remove from the cell state. Using the previous hidden state h and the current input x, it outputs a value between 0 and 1 for each element of the cell state. A value near 1 means “keep the information,” and a value near 0 means “discard it.”

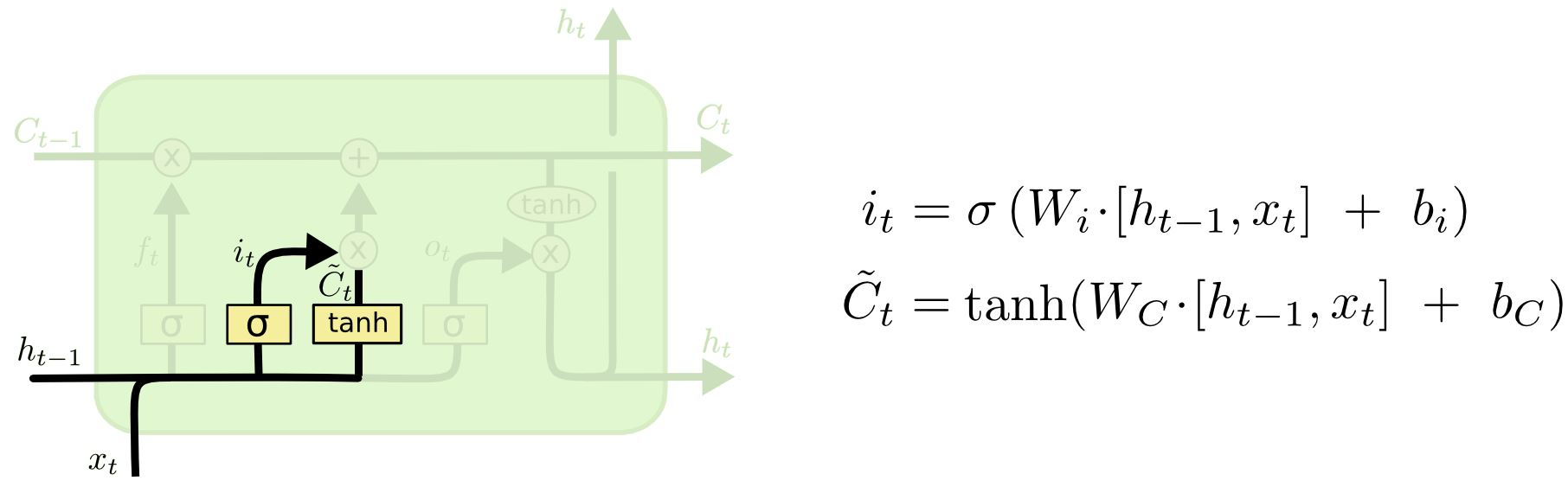
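The forget gate described above can be sketched in plain Python as a sigmoid layer applied to the concatenation of h and x. This is a minimal sketch of the standard formulation. The names `W_f`, `b_f`, and `forget_gate` are assumptions chosen for illustration, and the weights are hand-picked rather than learned.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forget_gate(h_prev, x_t, W_f, b_f):
    """Return one keep-factor in (0, 1) per cell-state element.

    W_f has one row per cell-state element; each row has
    len(h_prev) + len(x_t) weights (assumed layout).
    """
    concat = h_prev + x_t  # list concatenation of [h, x]
    return [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_f, b_f)
    ]


# Hand-picked weights: the first unit reacts strongly positively to x,
# the second strongly negatively.
f_t = forget_gate(
    h_prev=[0.5, -0.5],
    x_t=[1.0],
    W_f=[[0.0, 0.0, 10.0], [0.0, 0.0, -10.0]],
    b_f=[0.0, 0.0],
)
print(f_t)  # first value close to 1 (keep), second close to 0 (discard)
```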

Input Gate

The input gate controls which new information should be stored in the cell state. It uses the previous hidden state and current input to determine which elements to update, while a separate tanh layer proposes the candidate values that could be written.

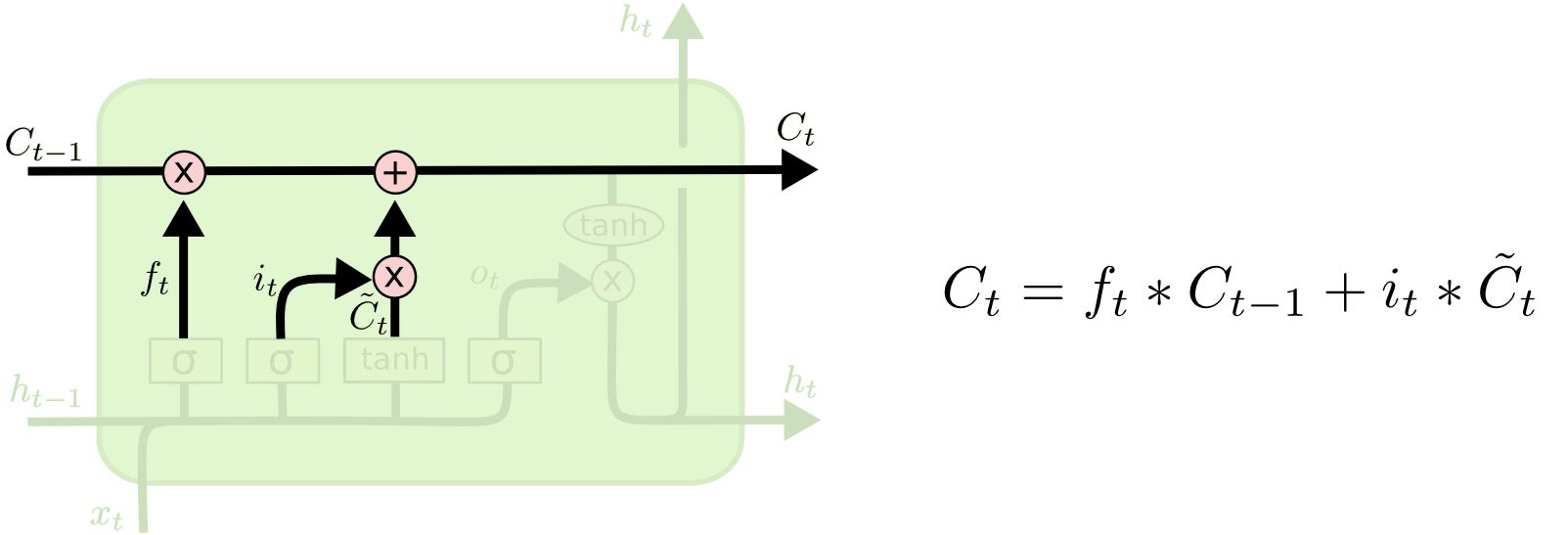
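As a rough sketch of this step, the code below computes both the input gate and the candidate values from the same concatenated input. It assumes the standard formulation, with a sigmoid layer for the gate and a tanh layer for the candidates. The parameter names `W_i`, `b_i`, `W_c`, `b_c` and the helper `affine` are hypothetical.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Compute W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def input_gate(h_prev, x_t, W_i, b_i, W_c, b_c):
    """Return (i_t, c_tilde): the write strengths and the candidate values."""
    concat = h_prev + x_t  # list concatenation of [h, x]
    i_t = [sigmoid(z) for z in affine(W_i, b_i, concat)]        # each in (0, 1)
    c_tilde = [math.tanh(z) for z in affine(W_c, b_c, concat)]  # each in (-1, 1)
    return i_t, c_tilde


# Hand-picked weights for a single cell-state element.
i_t, c_tilde = input_gate(
    h_prev=[0.0],
    x_t=[1.0],
    W_i=[[0.0, 0.0]], b_i=[0.0],  # zero pre-activation, so the gate is half open
    W_c=[[0.0, 1.0]], b_c=[0.0],  # candidate is tanh of the input
)
print(i_t, c_tilde)
```

Keeping the gate and the candidate separate lets the network decide "how much to write" independently of "what to write".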

Update

The cell state is updated by combining the previous cell state, scaled by the forget gate, with the new candidate values, scaled by the input gate. This is where the LSTM decides how much to forget from the past and how much new information to add.

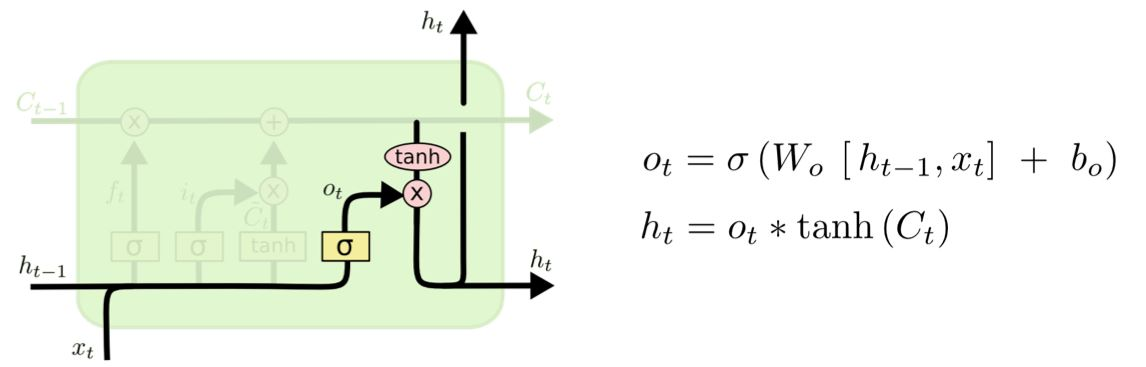
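The update can be written as an element-wise rule: new cell state = forget gate × old cell state + input gate × candidate. The sketch below assumes the gate outputs are already available as lists. The names `f_t`, `i_t`, `c_tilde`, and `update_cell_state` are illustrative, not taken from the text above.

```python
def update_cell_state(c_prev, f_t, i_t, c_tilde):
    """Element-wise update: c_t = f_t * c_prev + i_t * c_tilde."""
    return [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]


c_t = update_cell_state(
    c_prev=[2.0, -1.0],
    f_t=[1.0, 0.0],      # keep the first element, forget the second
    i_t=[0.0, 1.0],      # write nothing to the first, write fully to the second
    c_tilde=[0.5, 0.5],  # candidate values
)
print(c_t)  # first element carried over unchanged, second replaced by its candidate
```

When the forget gate is near 1 and the input gate is near 0, the old value passes through almost untouched, which is the "conveyor belt" behavior of the cell state.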

Output Gate

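The output gate determines the next hidden state, which is passed to the next time step and can also be used as the output. It filters a tanh-squashed copy of the updated cell state, so only selected parts of the cell state are exposed.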
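A minimal sketch of this last step, assuming the standard formulation in which the hidden state is the output gate multiplied element-wise by tanh of the updated cell state. The names `W_o`, `b_o`, and `output_gate` are hypothetical, and the weights are hand-picked.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def output_gate(h_prev, x_t, c_t, W_o, b_o):
    """Return (o_t, h_t): the gate values and the next hidden state."""
    concat = h_prev + x_t  # list concatenation of [h, x]
    o_t = [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_o, b_o)
    ]
    # Squash the cell state into (-1, 1), then let the gate decide how much to expose.
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return o_t, h_t


o_t, h_t = output_gate(
    h_prev=[0.0],
    x_t=[0.0],
    c_t=[2.0],
    W_o=[[0.0, 0.0]], b_o=[0.0],  # zero pre-activation, so the gate is half open
)
print(o_t, h_t)
```

Because the hidden state is a gated view of the cell state, the cell can hold information that is not yet exposed to the rest of the network.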